Linear algebra is a fundamental area of mathematics that underpins fields such as computer science and data analysis. Grasping its core concepts can significantly strengthen your problem-solving and analytical skills.

Linear algebra is a branch of mathematics that deals with vectors, vector spaces, and linear transformations. It may sound complex at first, but understanding its key concepts can open up a world of opportunities in various fields, including computer science, engineering, and data science. This article will simplify linear algebra, explore its applications, and provide insights into how it can be utilized in real-world scenarios.

The Basics of Linear Algebra

At its core, linear algebra focuses on the properties and operations of vectors and matrices. Here are some fundamental concepts:

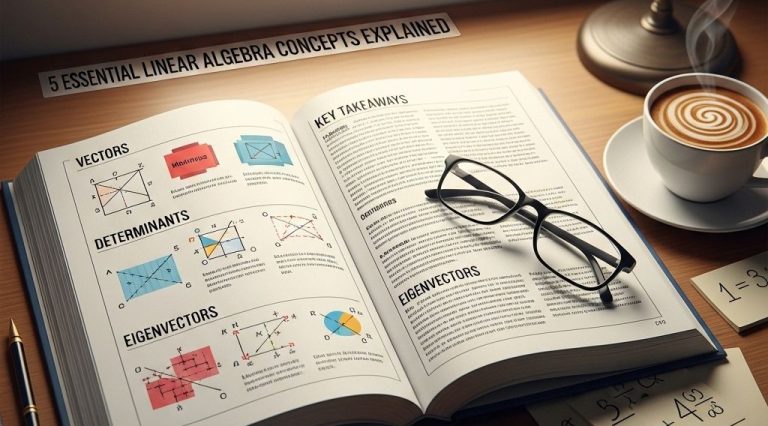

Vectors

A vector is an ordered array of numbers, which can represent points in space, directions, or quantities. For example:

- A 2D vector: (x, y)

- A 3D vector: (x, y, z)

Matrices

A matrix is a rectangular array of numbers arranged in rows and columns. Matrices are used to perform linear transformations and represent systems of linear equations. For example:

| Column 1 | Column 2 | Column 3 |
|---|---|---|
| 1 | 2 | 3 |
| 4 | 5 | 6 |

Key Operations

Understanding basic operations on vectors and matrices is crucial in linear algebra. Below are some essential operations:

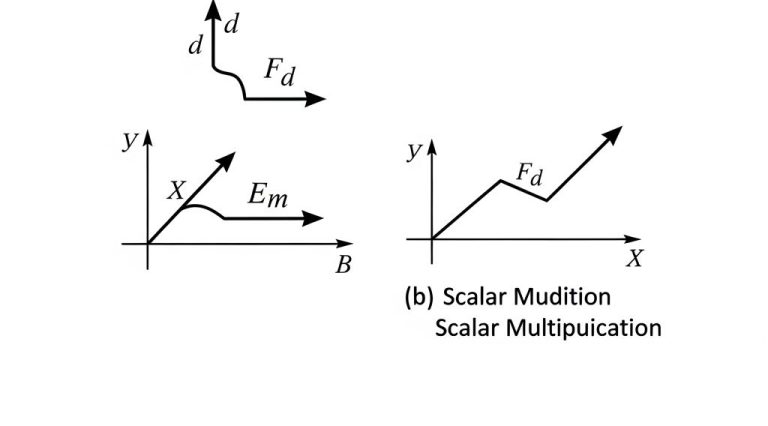

Vector Addition

Vectors can be added together by adding their corresponding components. For instance:

- Given vectors: A = (2, 3) and B = (1, 4)

- Vector addition: A + B = (2+1, 3+4) = (3, 7)
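
The component-wise rule above can be sketched in a few lines of plain Python. The function name `add_vectors` is a hypothetical helper chosen for illustration, and tuples stand in for vectors; in practice a numerical library would usually be used instead.

```python
def add_vectors(a, b):
    """Add two vectors by adding their corresponding components."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    return tuple(x + y for x, y in zip(a, b))


A = (2, 3)
B = (1, 4)
print(add_vectors(A, B))  # (3, 7)
```

The length check reflects the fact that addition is only defined for vectors with the same number of components.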

Scalar Multiplication

Multiplying a vector by a scalar (a single number) involves multiplying each component of the vector by that scalar:

- Given vector: A = (2, 3) and scalar k = 3

- Result: kA = (3*2, 3*3) = (6, 9)
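
As a minimal sketch of the same operation in plain Python (the helper name `scale_vector` is an assumption made for this example):

```python
def scale_vector(k, v):
    """Multiply every component of vector v by the scalar k."""
    return tuple(k * x for x in v)


A = (2, 3)
k = 3
print(scale_vector(k, A))  # (6, 9)
```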

Matrix Multiplication

Matrix multiplication is a bit more complex than vector addition, but it follows specific rules. When multiplying two matrices, the number of columns in the first matrix must equal the number of rows in the second. The resulting matrix will have dimensions defined by the rows of the first and columns of the second matrix. Here’s an example:

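| Matrix A | | Matrix B |
|---|---|---|
| [1, 2] | * | [5] |
| [3, 4] | | [6] |

Here A is 2×2 and B is 2×1, so the product is the 2×1 matrix with entries 1*5 + 2*6 = 17 and 3*5 + 4*6 = 39.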
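
The row-by-column rule can be sketched in plain Python as follows. Matrices are stored as lists of rows, and `matmul` is a hypothetical helper written for this example rather than a library function.

```python
def matmul(a, b):
    """Multiply matrix a (m x n) by matrix b (n x p), both lists of rows."""
    rows_a, cols_a = len(a), len(a[0])
    rows_b, cols_b = len(b), len(b[0])
    if cols_a != rows_b:
        raise ValueError("columns of the first matrix must equal rows of the second")
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [
        [sum(a[i][k] * b[k][j] for k in range(cols_a)) for j in range(cols_b)]
        for i in range(rows_a)
    ]


A = [[1, 2], [3, 4]]
B = [[5], [6]]
print(matmul(A, B))  # [[17], [39]]
```

Swapping the arguments, `matmul(B, A)`, raises the error here because B has one column while A has two rows, which illustrates that matrix multiplication is order-sensitive.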

Understanding Linear Transformations

Linear transformations are functions that map vectors to other vectors while preserving the operations of vector addition and scalar multiplication. They can be represented using matrices. Consider the transformation represented by a matrix M: y = Mx, where x is an input vector and y is the transformed output vector.
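
To make "preserving addition and scalar multiplication" concrete, the sketch below checks that M(a·u + b·v) equals a·(Mu) + b·(Mv) for one matrix. The matrix, vectors, and scalars are arbitrary values picked for illustration, and `apply_matrix` is a hypothetical helper.

```python
def apply_matrix(m, x):
    """Compute y = Mx for a matrix m (list of rows) and a vector x."""
    return [sum(entry * xj for entry, xj in zip(row, x)) for row in m]


M = [[2, 1], [0, 3]]
u = [1, 2]
v = [3, -1]
a, b = 2, 5

# Transform the combination a*u + b*v ...
combined = [a * ui + b * vi for ui, vi in zip(u, v)]
left = apply_matrix(M, combined)

# ... and compare with combining the transformed vectors.
Mu = apply_matrix(M, u)
Mv = apply_matrix(M, v)
right = [a * p + b * q for p, q in zip(Mu, Mv)]

print(left, right)  # [33, -3] [33, -3]
```

A single numeric check like this does not prove linearity, but it shows what the property means for a matrix transformation.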

Examples of Linear Transformations

Here are some common linear transformations:

- Rotation: Rotating a vector around the origin in a 2D space.

- Scaling: Changing the size of a vector while maintaining its direction.

- Shearing: Shifting each point parallel to one axis by an amount proportional to its coordinate along the other axis, so that shapes are slanted.
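
Each of these transformations corresponds to a 2×2 matrix. The sketch below uses the standard textbook forms; the helper names (`rotation`, `scaling`, `shear_x`, `apply_matrix`) are assumptions made for this example.

```python
import math


def apply_matrix(m, x):
    """Compute y = Mx for a matrix m (list of rows) and a vector x."""
    return [sum(entry * xj for entry, xj in zip(row, x)) for row in m]


def rotation(theta):
    """Counter-clockwise rotation about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def scaling(k):
    """Uniform scaling by factor k (direction is kept when k > 0)."""
    return [[k, 0], [0, k]]


def shear_x(k):
    """Horizontal shear: x shifts by k times the y coordinate."""
    return [[1, k], [0, 1]]


rotated = apply_matrix(rotation(math.pi / 2), [1, 0])  # approximately [0, 1]
scaled = apply_matrix(scaling(2), [1, 2])              # [2, 4]
sheared = apply_matrix(shear_x(1.5), [1, 2])           # [4.0, 2]
print(rotated, scaled, sheared)
```

The rotated result is only approximately [0, 1] because `math.cos(math.pi / 2)` is a tiny nonzero floating-point number rather than exactly zero.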

Applications of Linear Algebra

Linear algebra plays a vital role in several fields, including:

Computer Graphics

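In computer graphics, linear algebra is used to manipulate images and build 3D models. Rotation and scaling are linear transformations applied as matrix multiplications; translation is not linear on its own, but it fits the same matrix framework once points are written in homogeneous coordinates. Combining these lets graphics programmers produce realistic animations.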
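
One common way to express translation as a matrix product is to append a 1 to each 2D point (homogeneous coordinates) and use a 3×3 matrix. The following is a minimal sketch of that idea; the helper names and the sample point are hypothetical.

```python
def apply_matrix(m, x):
    """Compute y = Mx for a matrix m (list of rows) and a vector x."""
    return [sum(entry * xj for entry, xj in zip(row, x)) for row in m]


def translation(tx, ty):
    """3x3 homogeneous matrix that moves a 2D point by (tx, ty)."""
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


point = [2, 3, 1]  # the 2D point (2, 3) with a trailing 1
moved = apply_matrix(translation(4, -1), point)
print(moved)  # [6, 2, 1]
```

Because the translation is now a matrix, it can be multiplied together with rotation and scaling matrices (extended to 3×3) to form a single combined transformation.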

Machine Learning

Machine learning algorithms often rely on linear algebra to process data. Techniques like Principal Component Analysis (PCA) use matrix operations to reduce the dimensionality of datasets while preserving essential features.
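
As a rough illustration of the idea behind PCA, the sketch below reduces 2D points to one dimension. It centers the data, builds the 2×2 sample covariance matrix, and takes the eigenvector with the largest eigenvalue using the closed-form formula for symmetric 2×2 matrices. This only works for two features; the function names and the sample data are assumptions, and real projects would typically rely on a numerical library.

```python
import math


def first_principal_component(points):
    """Return (unit direction, variance along it) for a list of 2D points."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the sample covariance matrix [[a, b], [b, c]].
    a = sum(x * x for x, _ in centered) / (n - 1)
    b = sum(x * y for x, y in centered) / (n - 1)
    c = sum(y * y for _, y in centered) / (n - 1)

    # Largest eigenvalue of a symmetric 2x2 matrix, in closed form.
    largest = (a + c) / 2 + math.hypot((a - c) / 2, b)

    # A matching eigenvector; the diagonal case is handled separately.
    if abs(b) > 1e-12:
        vx, vy = b, largest - a
    else:
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    return (vx / norm, vy / norm), largest


def project(points, direction):
    """Reduce 2D points to 1D scores along the given unit direction."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    return [
        (x - mean_x) * direction[0] + (y - mean_y) * direction[1]
        for x, y in points
    ]


data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8)]  # made-up sample
direction, variance = first_principal_component(data)
scores = project(data, direction)
print(direction, variance, scores)
```

Each point is replaced by a single score, which is the sense in which dimensionality is reduced while most of the variation in the data is kept.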

Data Science

Data scientists apply linear algebra to analyze and interpret large volumes of data. Matrix factorizations, such as Singular Value Decomposition (SVD), are critical for tasks like recommendation systems.
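
A full SVD is normally computed with a numerical library. The sketch below shows only a simplified piece of the idea: it uses power iteration to estimate the largest singular value and its singular vectors, then builds the best rank-1 approximation of a matrix. The function name, the iteration count, and the small ratings table are all hypothetical, and the method assumes the all-ones starting vector is not orthogonal to the leading singular vector.

```python
import math


def rank_one_approximation(a, iterations=100):
    """Estimate the leading singular triple of matrix a by power iteration."""
    rows, cols = len(a), len(a[0])
    v = [1.0 / math.sqrt(cols)] * cols
    for _ in range(iterations):
        # One step of power iteration on A^T A: w = A v, then v_next = A^T w.
        w = [sum(a[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        v_next = [sum(a[i][j] * w[i] for i in range(rows)) for j in range(cols)]
        norm = math.sqrt(sum(x * x for x in v_next))
        if norm == 0:
            raise ValueError("start vector has no component along a singular vector")
        v = [x / norm for x in v_next]

    av = [sum(a[i][j] * v[j] for j in range(cols)) for i in range(rows)]
    sigma = math.sqrt(sum(x * x for x in av))
    u = [x / sigma for x in av]
    approx = [[sigma * u[i] * v[j] for j in range(cols)] for i in range(rows)]
    return sigma, u, v, approx


# Hypothetical ratings: rows are users, columns are items.
ratings = [[5, 4, 1], [4, 5, 1], [1, 1, 5]]
sigma, u, v, approx = rank_one_approximation(ratings)
print(round(sigma, 3))
for row in approx:
    print([round(x, 2) for x in row])
```

In a recommendation setting, a low-rank approximation like this smooths the ratings table, and keeping a few more singular triples would capture more of its structure.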

Conclusion

Understanding linear algebra is essential for anyone looking to delve into advanced mathematics, computer science, or data analysis. By comprehending the fundamentals of vectors, matrices, and linear transformations, you can unlock powerful tools for problem-solving across a wide array of disciplines. Embrace the beauty of linear algebra, and you will find it a rewarding and invaluable asset in your mathematical toolkit.

FAQ

What is linear algebra?

Linear algebra is a branch of mathematics that focuses on vector spaces and linear mappings between them, dealing with concepts like vectors, matrices, and systems of linear equations.

Why is linear algebra important?

Linear algebra is crucial in various fields including engineering, physics, computer science, economics, and statistics, as it provides tools for modeling and solving problems involving linear relationships.

What are vectors in linear algebra?

Vectors are quantities that have both magnitude and direction, represented as arrays of numbers. They can be used to describe points in space, forces, or any quantity that has direction.

What is a matrix?

A matrix is a rectangular array of numbers arranged in rows and columns that can represent linear transformations and systems of equations.

How are systems of equations solved using linear algebra?

Systems of equations can be solved using methods such as substitution, elimination, or matrix operations like Gaussian elimination to find the values of the variables.
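
As an illustration, here is a minimal sketch of Gaussian elimination with partial pivoting followed by back substitution, in plain Python. The function name `solve` and the example system (2x + y = 5, x + 3y = 10) are assumptions chosen for this answer.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination."""
    n = len(a)
    # Build the augmented matrix [a | b] as floats, leaving the inputs untouched.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]

    for col in range(n):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]

    # Back substitution, from the last row upward.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


print(solve([[2, 1], [1, 3]], [5, 10]))  # [1.0, 3.0]
```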

What are eigenvalues and eigenvectors?

Eigenvalues and eigenvectors help describe how a linear transformation acts. An eigenvector is a nonzero vector that the transformation only scales, so it stays on the same line through the origin; its eigenvalue is the scale factor, which indicates how much the vector is stretched or compressed (and reversed, if the eigenvalue is negative).
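
A small numeric check can make this concrete. For the example matrix below (chosen for illustration), the vector (1, 1) is an eigenvector with eigenvalue 3 and (1, -1) is an eigenvector with eigenvalue 1, so applying the matrix only rescales them. The helper `apply_matrix` is hypothetical.

```python
def apply_matrix(m, x):
    """Compute y = Mx for a matrix m (list of rows) and a vector x."""
    return [sum(entry * xj for entry, xj in zip(row, x)) for row in m]


A = [[2, 1], [1, 2]]

v1 = [1, 1]
print(apply_matrix(A, v1))  # [3, 3], which is 3 * v1

v2 = [1, -1]
print(apply_matrix(A, v2))  # [1, -1], which is 1 * v2

# A vector that is not an eigenvector changes direction.
print(apply_matrix(A, [1, 0]))  # [2, 1]
```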