Quantum computing is one of the most closely watched developments in computing. Unlike traditional computers, which use bits as the smallest unit of data, quantum computers use the principles of quantum mechanics to process information in fundamentally different ways. This article covers the basic principles, the best-known algorithms, the likely applications, and the challenges that remain.

Understanding the Basics of Quantum Computing

To grasp the concept of quantum computing, it’s essential to understand the fundamental differences between classical bits and quantum bits, or qubits.

Bits vs. Qubits

| Feature | Classical Bit | Quantum Bit (Qubit) |
|---|---|---|
| State | 0 or 1 | 0, 1, or a superposition of both |
| Measurement | Reading the bit does not change it | State collapses to 0 or 1 upon measurement |
| Processing | Acts on one definite value at a time | Acts on superpositions; entanglement correlates qubits |

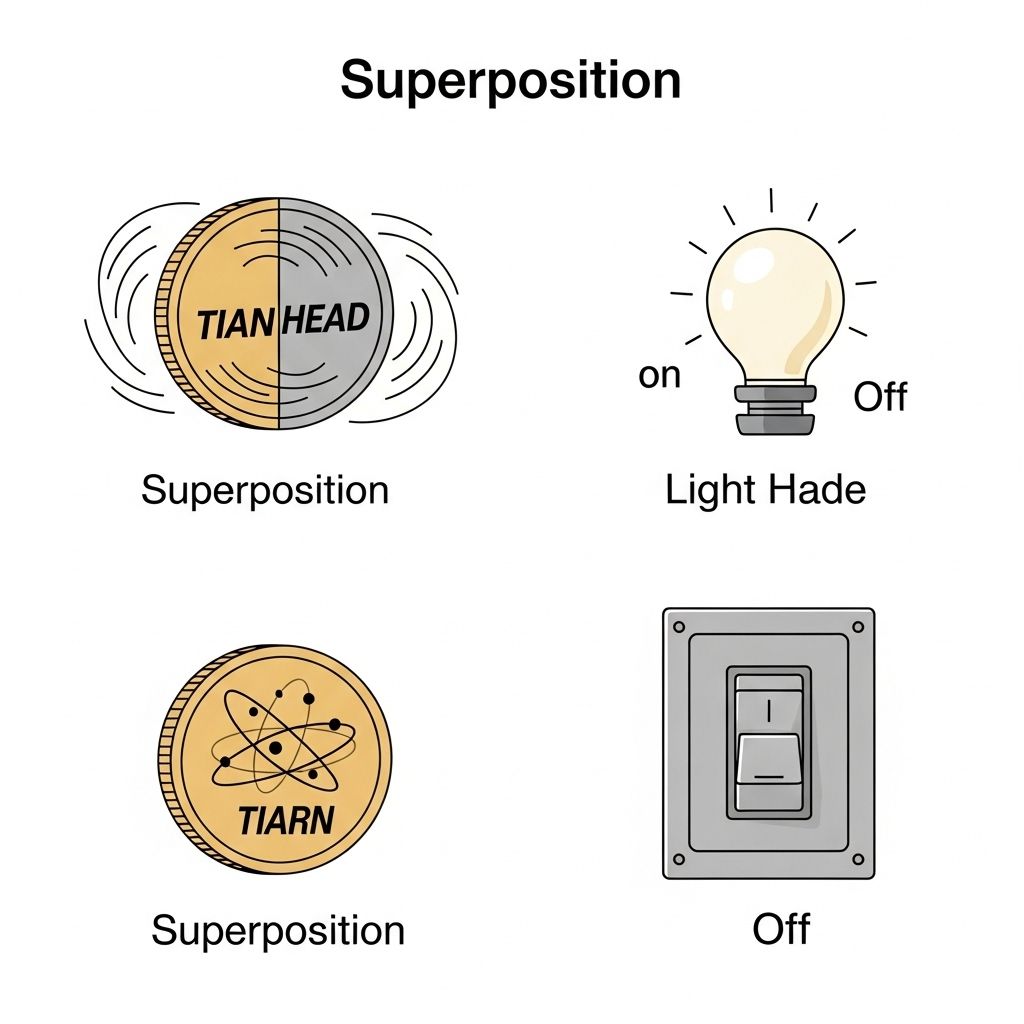

The Power of Superposition

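At the core of quantum computing is the concept of superposition, which allows a qubit to exist in a weighted combination of 0 and 1 rather than in one definite state. Describing n qubits takes 2^n amplitudes, so the state space grows exponentially with the number of qubits. That does not make every computation exponentially faster: a measurement returns only one outcome, so algorithms must be designed to make the correct answer the likely one.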

Explaining Superposition

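Imagine a spinning coin. While it is in the air, it is neither heads nor tails, and only landing settles the outcome. The analogy is loose, but it captures the idea: a register of qubits in superposition carries amplitudes for many combinations of 0s and 1s at once, and quantum algorithms manipulate those amplitudes so that useful answers become the likely measurement results.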
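The coin picture can be made concrete with a tiny classical simulation of one qubit. The sketch below is illustrative only: the helper names (`apply_hadamard`, `probabilities`, `measure`) are made up for this example, and it simulates the arithmetic on an ordinary computer rather than running on quantum hardware.

```python
import math
import random

def apply_hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [a0, a1]."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return [s * (a0 + a1), s * (a0 - a1)]

def probabilities(state):
    """Born rule: an outcome's probability is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def measure(state, shots, seed=0):
    """Simulate repeated measurements; each shot yields one definite bit."""
    rng = random.Random(seed)
    p0 = probabilities(state)[0]
    return [0 if rng.random() < p0 else 1 for _ in range(shots)]

ket0 = [1.0, 0.0]              # the state |0>
plus = apply_hadamard(ket0)    # equal superposition of |0> and |1>
probs = probabilities(plus)
outcomes = measure(plus, shots=1000)
back = apply_hadamard(plus)    # a second Hadamard undoes the first

print("probabilities:", probs)
print("fraction of 1s:", sum(outcomes) / len(outcomes))
print("after second Hadamard:", back)
```

Each simulated measurement returns a plain 0 or 1, and only the statistics over many shots reveal the superposition. Applying the gate a second time returns the qubit to |0>, which a coin that was merely "randomly heads or tails" would not do; that interference is what quantum algorithms exploit.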

Entanglement: A Quantum Marvel

Another critical principle of quantum mechanics is entanglement, where qubits become linked, such that the state of one qubit can depend on the state of another, regardless of the distance between them.

How Entanglement Works

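Entanglement lets a group of qubits hold correlations that no set of independent classical bits can reproduce, and quantum algorithms depend on it for their speedups. It also underpins protocols such as quantum teleportation and quantum key distribution. It does not allow information to be sent faster than light, because the outcome of measuring either qubit looks random on its own.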
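The linked behaviour of entangled qubits can be illustrated by simulating the standard two-qubit Bell state, prepared with a Hadamard gate followed by a CNOT gate. This is a minimal sketch with hypothetical helper names, and it stores the state as four amplitudes ordered 00, 01, 10, 11.

```python
import math
import random

def hadamard_on_first(state):
    """Apply H to the first qubit of a two-qubit state [a00, a01, a10, a11]."""
    a00, a01, a10, a11 = state
    s = 1 / math.sqrt(2)
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_first_controls_second(state):
    """CNOT: flip the second qubit when the first is 1 (swaps a10 and a11)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def sample_pairs(state, shots, seed=0):
    """Sample joint measurement outcomes as (first_bit, second_bit) pairs."""
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in state]
    indices = rng.choices(range(4), weights=weights, k=shots)
    return [(i >> 1, i & 1) for i in indices]

# Start in |00>, then entangle the two qubits.
bell = cnot_first_controls_second(hadamard_on_first([1.0, 0.0, 0.0, 0.0]))
pairs = sample_pairs(bell, shots=1000)

matching = sum(1 for a, b in pairs if a == b)
ones_on_first = sum(a for a, _ in pairs)
print("pairs that agree:", matching, "of", len(pairs))
print("first qubit measured as 1:", ones_on_first, "times")
```

In this simulation the two bits always agree, yet each bit taken alone is an even coin flip. That combination of perfect correlation and individual randomness is why entanglement cannot be used to send a message on its own.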

Quantum Algorithms

Quantum computing isn’t just about hardware; it’s also about the algorithms that run on quantum machines. Notable quantum algorithms include:

- Shor’s Algorithm: Factors large integers in polynomial time, posing a threat to widely used public-key encryption such as RSA.

- Grover’s Algorithm: Provides a quadratic speedup for unstructured search problems.

- Quantum Fourier Transform: A critical component in various quantum algorithms, including Shor’s.
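To give a feel for the quadratic speedup in Grover's algorithm, the following sketch simulates it on a small state vector. It is a classical simulation under simplifying assumptions: the function name `grover_search` and the choice of 3 qubits with marked item 5 are arbitrary, and the oracle is modelled as a direct sign flip on one amplitude.

```python
import math

def grover_search(n_qubits, marked):
    """Simulate Grover's algorithm; return (outcome probabilities, iterations used)."""
    n = 2 ** n_qubits
    state = [1 / math.sqrt(n)] * n              # uniform superposition over all items
    iterations = math.floor(math.pi / 4 * math.sqrt(n))
    for _ in range(iterations):
        state[marked] = -state[marked]          # oracle: flip the sign of the marked item
        mean = sum(state) / n
        state = [2 * mean - a for a in state]   # diffusion: inversion about the mean
    return [a * a for a in state], iterations

probs, iterations = grover_search(3, marked=5)
best = max(range(len(probs)), key=lambda i: probs[i])

print("iterations:", iterations)
print("most likely item:", best)
print("probability of marked item:", round(probs[5], 4))
```

With 8 items the loop runs only 2 times, roughly the square root of the search space, and the marked item ends up with a probability above 90 percent. A classical search would need to check about half the items on average.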

Applications of Quantum Computing

The potential applications of quantum computing are vast and span multiple industries:

1. Cryptography

A sufficiently large, error-corrected quantum computer could break widely used public-key schemes such as RSA and elliptic-curve cryptography, which is driving the development of quantum-resistant (post-quantum) algorithms.
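The threat to RSA comes from the fact that factoring reduces to finding the period (order) of a number modulo n, which is the step Shor's algorithm speeds up. The sketch below shows only that classical reduction on the toy number 15. The order is found by brute force here, standing in for the quantum step, and the function names are hypothetical.

```python
import math

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would perform efficiently in
    Shor's algorithm. Requires gcd(a, n) == 1.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Try to split n using the order of a modulo n; return None if this a fails."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: try a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root of 1: try a different a
    p = math.gcd(half - 1, n)
    return p, n // p

print(find_order(7, 15))          # prints 4
print(factor_from_order(7, 15))   # prints (3, 5)
```

For real key sizes the brute-force loop in `find_order` is hopeless, which is exactly why RSA is considered safe against classical attackers and why an efficient quantum replacement for that step matters.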

2. Drug Discovery

Simulating molecular structures and interactions using quantum computers can accelerate drug discovery processes.

3. Optimization Problems

Industries such as logistics, finance, and manufacturing could benefit if quantum algorithms prove faster than classical methods on their optimization problems. How large that advantage will be in practice is still an open research question.

4. Artificial Intelligence

Quantum computing may speed up parts of some machine learning workloads, such as sampling and linear algebra routines, although practical advantages on real datasets have yet to be demonstrated.

Challenges in Quantum Computing

Despite its promise, quantum computing faces several challenges:

- Qubit Stability: Qubits are sensitive to their environment, leading to decoherence and loss of information.

- Error Correction: Quantum error correction methods are complex and resource-intensive.

- Scaling: Building scalable quantum systems remains a significant hurdle for researchers.
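The cost of error correction is easiest to see in a classical analogy. The sketch below uses a three-bit repetition code with majority voting, the classical counterpart of the three-qubit bit-flip code. It is an analogy under loud assumptions: real quantum codes cannot copy qubits, must also handle phase errors, and have to detect errors without measuring the encoded data directly. All names and the 5 percent error rate are made up for illustration.

```python
import random

def encode(bit):
    """Repetition code: store one logical bit in three physical bits."""
    return [bit, bit, bit]

def apply_noise(bits, flip_probability, rng):
    """Flip each physical bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]

def decode(bits):
    """Majority vote: corrects any single bit flip."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(flip_probability, trials=20000, seed=0):
    """Estimate how often the decoded bit is wrong after noise."""
    rng = random.Random(seed)
    errors = sum(
        decode(apply_noise(encode(0), flip_probability, rng)) != 0
        for _ in range(trials)
    )
    return errors / trials

p = 0.05
rate = logical_error_rate(p)
print(f"physical error rate: {p}, estimated logical error rate: {rate:.4f}")
```

Tripling the number of bits pushes the error rate from p down to roughly 3p², which only helps when p is already small. Quantum codes follow the same logic at much higher overhead, which is why many physical qubits are expected to be needed for each reliable logical qubit.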

Current State of Quantum Computing

As of late 2023, several companies and institutions are at the forefront of quantum computing research and development:

Leading Players

| Company/Institution | Notable Contributions |
|---|---|
| IBM | IBM Quantum Experience, Qiskit framework |
| Google | Demonstrated quantum supremacy with Sycamore |
| Microsoft | Quantum Development Kit and Azure Quantum |
| Rigetti Computing | Focused on quantum cloud computing solutions |

The Future of Quantum Computing

Looking ahead, the future of quantum computing is filled with both promise and uncertainty. As the technology matures, we can expect:

- Enhanced Hardware: Improvements in qubit fidelity and coherence times.

- Wider Adoption: More industries integrating quantum computing into their operations.

- New Discoveries: Ongoing research may uncover even more applications and capabilities.

In conclusion, quantum computing could offer solutions to some problems currently deemed intractable, particularly in cryptography, simulation, and search. How far its impact reaches will depend on whether researchers and engineers can overcome the stability, error-correction, and scaling challenges described above.

FAQ

What is quantum computing?

Quantum computing is a type of computation that uses quantum bits, or qubits, together with quantum effects such as superposition and entanglement to solve certain problems faster than any known classical method.

How does quantum computing differ from classical computing?

Unlike classical computing, which uses bits as the smallest unit of data (0 or 1), quantum computing uses qubits that can represent and store information in multiple states simultaneously due to superposition.

What are qubits and why are they important?

Qubits are the fundamental units of quantum information. A qubit can be in a superposition of 0 and 1, and several qubits can be entangled with one another. Quantum algorithms exploit these properties to speed up certain calculations significantly.

What are some potential applications of quantum computing?

Quantum computing has potential applications in cryptography, drug discovery, optimization problems, complex simulations, and artificial intelligence, among others.

Is quantum computing ready for practical use today?

As of now, quantum computing is still in the experimental stage, with ongoing research focused on error correction and scalability before it can be widely used for practical applications.

What are some challenges facing quantum computing?

Challenges include maintaining qubit coherence, error rates in quantum operations, and the need for advanced algorithms that can leverage quantum advantages over classical computing.